Dmitri Korotkevitch, Erland Sommarskog

Finding High identity values: what could run out of inserts

Now, if we create identity(1,1) and use INT as a datatype, at some point, the INT limit can be reached, which is -2,147,483,648 to 2,147,483,647(docs)

Now once you reach that limit, inserts would fail, causing the failure of the server, which would cost a lot of money

Now we can change the datatype, from int to bigint, but this could take hours or days, all out of business

The alternative, is to identify such issues before they happen

Now, how could we do that:

use ContosoRetailDW

go

declare @id_types table

(

datatype_id int not null primary key,

datatype_name sysname not null,

max_entry decimal(38) not null

)

insert into @id_types(datatype_id,datatype_name,max_entry)

values

(48,'TINYINT',255),

(52,'SMALLINT',32767),

(56,'INT', 2147483647),

(127,'BIGINT',9223372036854775807),

(108,'NUMERIC',99999999999999999999999999999999999999),

(106,'DECIMAL',99999999999999999999999999999999999999)

declare @tipping_percent int = 1;

with identify_dis

as

(

select

s.name + '.' + t.name as table_name,

c.name as column_name,

it.datatype_name+ iif(it.datatype_id in (106,108),'('+ convert(varchar(2),c.precision)+ ')','') as [type],

convert(decimal(38),IDENT_CURRENT(t.name)) as id_id,

case

when it.datatype_id in (106,108)

then

case

when c.precision <38

then power(convert(decimal(38),10),c.precision) - 1

else it.max_entry

end

else

it.max_entry

end as max_entry

from

sys.tables t inner join sys.schemas s on s.schema_id = t.schema_id

inner join sys.columns c on c.object_id = t.object_id

inner join @id_types it on it.datatype_id = c.system_type_id

where

c.is_identity = 1

)

select *,

CONVERT(decimal(6,3),id_id/max_entry*100.) as full_percentage

from identify_dis

where CONVERT(decimal(6,3),id_id/max_entry*100.) >@tipping_percent

order by full_percentage desc ;

So let’s break it down:

- So we are trying to find the columns that have identity as a key

- so identity could be declared as tinyint, smallint, int, bigint, numeric or decimal

- Now the first 4 have limits preset

- but the other two you set them

- Or we put the max if we could not find it like every other identity data type

- Now, first we created table varibles that has 3 things

- The datatype id in sql server in sys.columns

- The maximum value that you can enter in this datatype

- And the name of it

- And we declared a variable that sets the percentage threshold that we care about, now here we set it to 1 percent, this is too low, but we did it to detect contoso database

- After that we our common table expression, we select from sys.tables joined it with sys.schemas and sys.columns, the first two to get the table database and schema, and the last for getting the datatype

- Then we told him to get us the datatype name

- Now if the datatype is numeric or decimal, there is a dynamic set for the precision

- So we told him to get them

- Otherwise, we would get the default max_enrties that we entered

- Then we calculated the max_entry or the last value that could be inserted dynamically, otherwise it is the defaults we inserted

- Then, after that we selected the common table expression, with one extra calculation the tipping percentage

- here we try to get the last id inserted

- and after that we divided that by the max_entry allowed value dynamic or default, multiply by a hundred

- and we get the percentage we are looking for

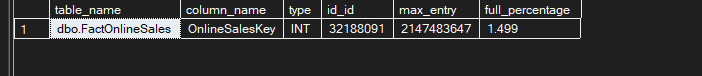

and the result was in ContosoRetailDW

So here it is clearly not an issue

the full percentage

or we could do the following:

use contosoretaildw

go

create table test_max_identity

(

id smallint identity(1,1),

col1 int null

)

;

go

with

n1(c) as(select 1 union all select 1),

n2(c) as( select 1 as t1 from n1 cross join n1 as t2),

n3(c) as(select 1 as t1 from n2 cross join n2 as t2 ),

n4(c) as (select 1 from n3 as t1 cross join n3 as t2),

ids(id) as ( select ROW_NUMBER() over (order by (select null)) from n4)

insert into test_max_identity(col1)

select id*2

from ids ;

go 10

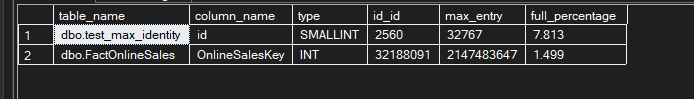

now if we run our script here:

finding overflowing sequence id columns:

in the previous script, we tried to find the columns that has sequence instead of identity

for more details on both go to our blog

Now in ContosoRetailDW do the following:

go

create sequence our_sequence

start with 1

increment by 1;

create table sequence_demo(

id smallint not null

constraint pk_sequence_demo primary key

default(next value for dbo.our_sequence),

col1 int)

---- now let's insert some

go

with

n1(c) as(select 1 union all select 1),

n2(c) as( select 1 as t1 from n1 cross join n1 as t2),

n3(c) as(select 1 as t1 from n2 cross join n2 as t2 ),

n4(c) as (select 1 from n3 as t1 cross join n3 as t2),

ids(id) as ( select ROW_NUMBER() over (order by (select null)) from n4)

insert into sequence_demo(col1)

select id*2

from ids ;

go 10

now here we created the same stuff we created before, but instead we used the more modern solution, sequence

now let’s write a script to get our values:

declare @data_types table

(

datatype_id int not null primary key,

datatype_name sysname not null,

max_entry decimal(38) not null

)

insert into @data_types(datatype_id,datatype_name,max_entry)

values

(48,'TINYINT',255),

(52,'SMALLINT',32767),

(56,'INT', 2147483647),

(127,'BIGINT',9223372036854775807),

(108,'NUMERIC',99999999999999999999999999999999999999),

(106,'DECIMAL',99999999999999999999999999999999999999)

declare @tipping_percent int = 1;

with columns_sequences

as

(

select

COL_NAME(c.object_id,c.column_id) as column_name,

OBJECT_SCHEMA_NAME(c.object_id) as schema_name,

OBJECT_NAME(c.object_id) as table_name,

d.referenced_schema_name as sequence_schema_name,

d.referenced_entity_name as sequence_name,

c.system_type_id as datatype_id

from

sys.sql_expression_dependencies d inner join sys.default_constraints dfc on dfc.object_id = d.referencing_id

inner join sys.columns c on c.object_id = dfc.parent_object_id

and c.column_id = dfc.parent_column_id

where d.referenced_class = 1

and exists

(

select 1

from sys.sequences sq

where sq.name = d.referenced_entity_name

and sq.schema_id = SCHEMA_ID(d.referenced_schema_name)

)

)

select

cs.schema_name + '.' + cs.table_name as column_name,

cs.sequence_schema_name +'.'+ cs.sequence_name as sequnce,

dt.datatype_name as datatype,

se.current_value as current_sequnce_value,

dt.max_entry as max_of_the_column_or_max_entry,

CONVERT(decimal(10,3),(CONVERT(decimal(38),se.current_value)/dt.max_entry*100.0)) percent_used

from columns_sequences cs inner join sys.sequences se on se.name = cs.sequence_name

and se.schema_id = SCHEMA_ID(cs.sequence_schema_name)

inner join @data_types dt on dt.datatype_id = cs.datatype_id

where (CONVERT(decimal(38),se.current_value)/dt.max_entry*100.0)>@tipping_percent

order by percent_used desc ;

so let’s break it down:

- the table variables do not differ in any way nor the threshold

- now we want to find the columns that uses the default object and their sequnces

- so we need 4 system catalog views, sys.columns, sys.defualt_constraints, sys.sql_expression_dependencies, and sys.sequnces

- so we joined them

- now in the common table expression, in the where clause we have the d.referenced_class = 1 which means it is schema scoped, so this sequnce is bound to a schema, so sequnces that are not will not be found

- if you want to do that you can just delete the whole claues and modify the query accordingly

- we join on the referencing_id = the id of the table default constranit referencing the defualt constraint = object_id

- the column is the parent of the of the constraint so that is the second join

- the referenced is the sequence

- so we are golden for the CTE

- in the last select statement we added the table variables

- the datatype_id to get the datatypes we want for the id columns

- their max_entry values

- and the threshold calculation to see how much they exceeded it

- and the current value of the id

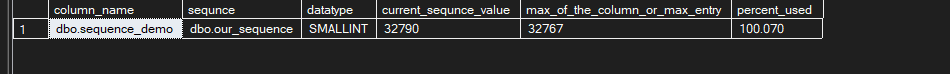

and that is it, our output was like this:

so we ran the code of creation more than one time, obviously it is not the 100.070 but this is a product of the rounding, we can still insert some more since it is not full yet

but the script is working so we are golden.

but what if the sequence ran out of numbers, since it is a different entity

how could we identify it? another blog.